Data not shown – Scientific publishing is broken


> This is the final piece of my 3-part series on Decentralized Science. Read part 1, ‘Decentralized Science – should Science be crypto-fied?’ and part 2 ‘Creative destruction of scientific funding – a DeSci approach’

Experiment X “failed”. It “didn’t work”, therefore (data not shown).

Every experiment includes controls to ensure that the chosen method has actually worked properly. If your carefully selected control(s) showed up the way you expected multiple times, then the experiment did work. It’s your test sample(s) that didn’t show results to support your hypothesis or fit into the rest of your data to make a ‘complete’ story. We all know that.

Does this sound all too familiar? Why is it culturally accepted to hide our negative data? How has it been normalized that neglecting negative results is good practice? What is it that led us to cherry-pick our data?

Scientific publishing is broken

Authentic and timely knowledge sharing through peer-reviewed scientific publications has become an almost unattainable pursuit.

‘Toxic positivity’

It’s sadly common that only positive results get to see the light. Often, when researchers have tried 10 different angles or methodologies to test one hypothesis, the 3 that support the hypothesis get into the manuscript, while the 7 that did not usually die in their lab notebooks. We do not feel comfortable sharing our ‘failed’ experiments openly. Additionally, hypotheses that align with popular claims tend to gain acceptance more easily. Having to give ‘politically right’ answers in science to suit the palate of journal editors creates another layer of pressure on scientists and limits the scientific boundaries we can break through. Such “toxic positivity” is highly dysfunctional.

‘Publish or perish’

A mentor once told me, when I was a young researcher, “Publications are the currencies in the academic world”. Scientists feel the need to push out papers because success in getting funded is heavily tied to metrics such as the h-index, which quantifies the impact of a scientist’s published work. The resulting stress to “publish or perish” incentivizes scientists to focus their attention on already well-cited work rather than on new ideas or on ideas beyond the scientific mainstream. While inadequate funding is upstream of such biases in how scientists choose their projects, the trickle-down effect reduces the amount of science being done and contributes to issues such as the replication crisis.

> The problems of scientific funding were discussed in my previous post, ‘Creative destruction of scientific funding – a DeSci approach’
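For readers who have not met the h-index mentioned above, here is a minimal sketch of how it is usually computed: a scientist has an h-index of h when h of their papers have at least h citations each. The function name `h_index` and the citation counts are made up for illustration.

```python
def h_index(citations):
    """Return the largest h such that h papers have at least h citations each."""
    # Rank papers from most cited to least cited.
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank  # the top `rank` papers all have at least `rank` citations
        else:
            break
    return h


# Hypothetical citation counts for one scientist's papers.
established = [25, 8, 5, 3, 3]
# The same record plus a brand-new paper that nobody has cited yet.
with_new_idea = established + [0]

print(h_index(established))
print(h_index(with_new_idea))
```

Both calls give the same value: an uncited paper on a new idea does nothing for the metric until others pick it up, which is one way to see why the metric rewards staying close to already well-cited work.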

Long delays in knowledge sharing

Journal shopping or hopping is now a shared behind-the-scenes story of almost every paper. The time it takes to publish a paper has become frustratingly long. Scientific information is frequently trapped in cycles of submission, rejection, review, re-review, and re-re-review. This saga eats up months or even years of scientists’ lives, interferes with job, grant and tenure applications, and, most importantly, slows down the dissemination of results. The peer-review process has its value and purpose in maintaining the quality and rigor of scientific publishing. But does it have to be this way?

Behind the mystery veil and paywall

Delayed information access may just be the tip of the iceberg. Despite the fact that science is the epitome of a global public good, a lot of scientific knowledge is imprisoned behind journal paywalls. Further, peer review often happens behind closed doors, and reviewers are kept anonymous so that they can give honest critiques without facing scrutiny. This lack of transparency forces authors and readers to trust that journal editors picked the right reviewers for the submitted work and that the process and outcomes were impartial.

Free unpaid labor

Scientific publishing is an enormously lucrative business, mostly managed by publishing conglomerates. Their profits and global reputations are built primarily on the free labor of academic scientists, who write and review articles on a purely voluntary basis. The brand-driven business models of reputable for-profit journals often put finances over scientific exchange, inclusion, or equity. Yet the dependence of scientists’ publishing records on major publishers has trapped the scientific community in an unhealthy co-dependent relationship.

These deep-seated problems in scientific publishing have led to an uproar among scientists and to various initiatives to fix them.

Can we fix it?

There is no one-size-fits-all solution.

Current open science tools

Some journals are already taking action on these palpable problems. For example, EMBO reports recently put out an editorial piece on ‘Publishing unpredictable research outcomes’ to encourage sharing of “well-developed null data on particularly noteworthy topics that refute long-standing or prominent claims in the literature”. Since 2021, non-profit open access journal eLife has been publishing papers alongside reports of reviewers and no longer accepts or rejects manuscripts after peer review. Cell Press Community Review attempts to speed up the publishing process and help researchers find the right fit for their paper quickly by considering submitted manuscripts simultaneously at multiple Cell Press journals.

In line with the open science efforts, funding agencies and journals require researchers to comply with data mandates. However, such mandates demand the maintenance of data-science centers and oblige scientists to reformat, deposit and manage all published data so that they are free to use, re-use, or redistribute. Though the intent is to preserve provenance and openness, the lack of support and funding to sustain this practice has led to some pushback from the scientific community.

Universities and academic institutions alike have set up preprint servers and repositories; to name just a few: OSF Preprints, arXiv, bioRxiv, medRxiv, preprints.org. A simple manuscript upload followed by basic quality control checks, with a turnaround of about 24 hours, has certainly shortened the delay in knowledge sharing. However, preprint manuscripts can be premature projects with poorly supported data, which require careful and critical interpretation. Review Commons tries to tackle these shortfalls by providing authors with a refereed preprint and reporting a single round of peer review, which could expedite editorial consideration during submission to publishing journals.

Individual scientists have also started finding creative ways to share their experimental experiences in newsletter formats. Check out Erika’s Updates, ‘A Publishing Experiment’, for possible ideas on scientific publishing reform.

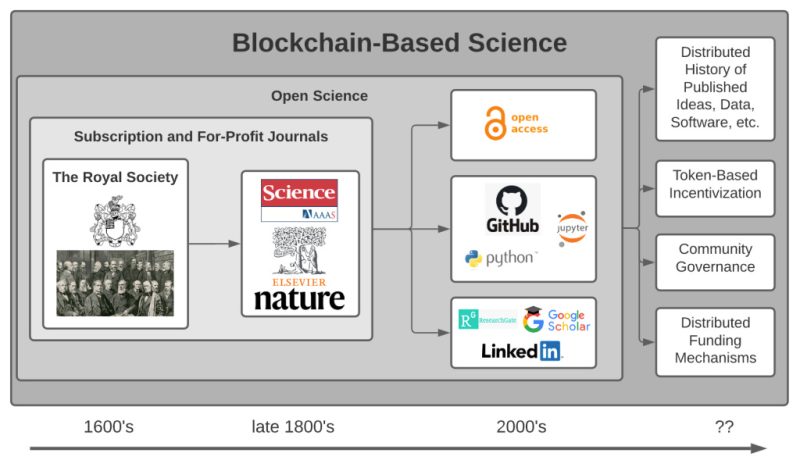

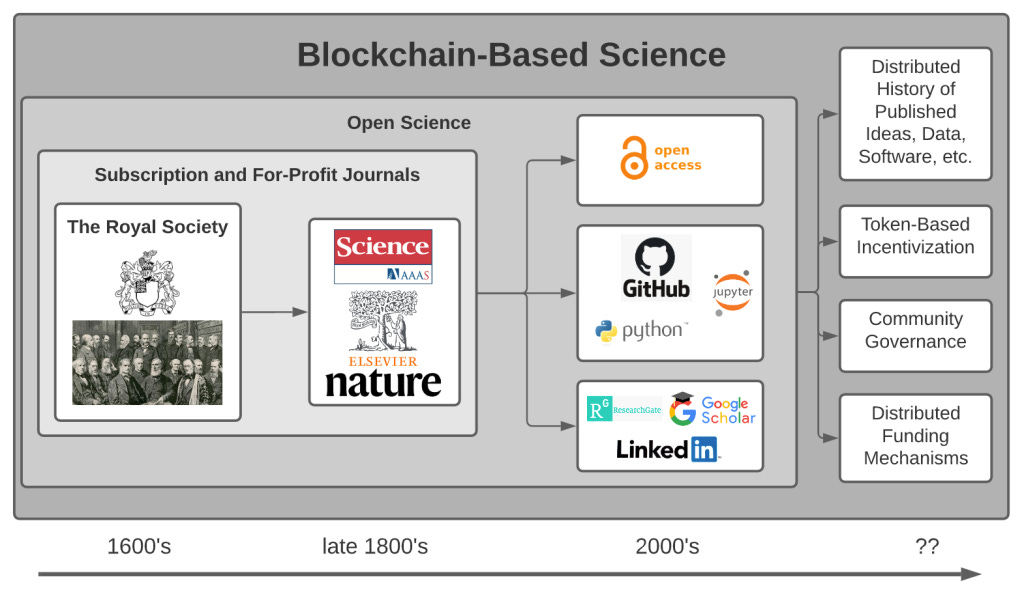

The DeSci approach

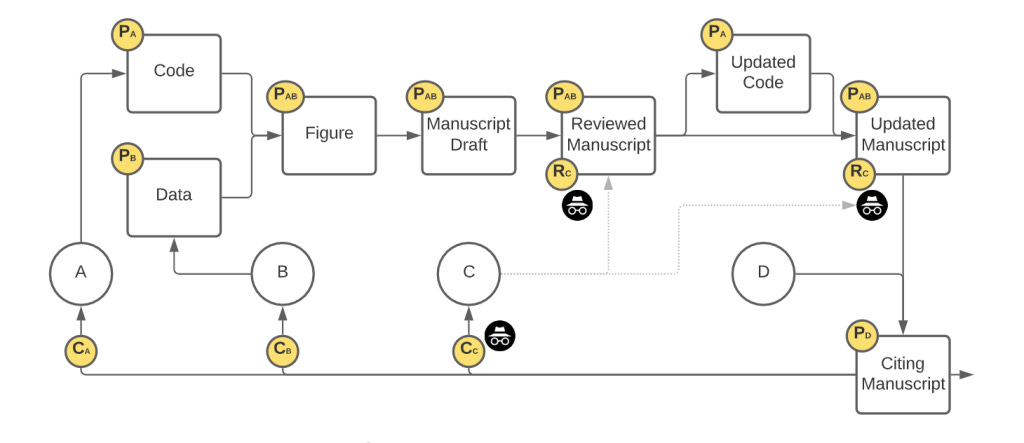

Complementary to these initiatives that still work within the current publishing system, the Decentralized Science (DeSci) approach uses web3 technologies to transform scientific knowledge into digital objects written on-chain. Such tokenization makes the publishing process more transparent and realigns the incentives for knowledge sharing and intellectual ownership within academic communities. With the lightning speed of the digital world, web3-driven platforms and blockchain-based publishing enable and improve secure data storage, sharing, management, and accessibility, and streamline coordination within the scientific community. All of this is achieved without a few elite publishers or critical data centers controlling the space.

Through blockchain-based publishing, any person will be able to put a timestamp on their idea from any place and at any time. Since the blockchain cannot be edited or erased, the evidence that the person had their idea at the time it was published will forever be imprinted, which makes “scooping” of ideas far harder. This also takes away the intermediary power of publishers and lessens the restrictions of publishing, thus encouraging scientists to publish more incrementally and continuously. It gives credibility to publishing all experimental investigations, including those that do not end up in a peer-reviewed publication or whose results are ambiguous, like Erika’s Updates mentioned above, but in a secure manner.
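To make the "cannot be edited or erased" idea a little more concrete, here is a toy sketch of an append-only hash chain in Python. It is not a real blockchain (there is no network, consensus, or token), and the names `fingerprint`, `append_record` and `verify`, as well as the example authors and dates, are hypothetical. It only shows the core trick: each record stores a hash of the manuscript and of the previous record, so quietly changing an old entry breaks every check that follows.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def fingerprint(manuscript: bytes) -> str:
    """SHA-256 digest of the manuscript; reveals nothing readable about it."""
    return hashlib.sha256(manuscript).hexdigest()


def _hash_body(body: dict) -> str:
    # Serialize with sorted keys so the same body always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_record(chain: list, manuscript: bytes, author: str, timestamp: str) -> dict:
    """Append a record that commits to the manuscript and to the previous record."""
    body = {
        "author": author,
        "timestamp": timestamp,
        "content_hash": fingerprint(manuscript),
        "prev_hash": chain[-1]["record_hash"] if chain else GENESIS,
    }
    record = dict(body, record_hash=_hash_body(body))
    chain.append(record)
    return record


def verify(chain: list) -> bool:
    """Recompute every hash; any edited or reordered record makes this fail."""
    prev_hash = GENESIS
    for record in chain:
        body = {k: v for k, v in record.items() if k != "record_hash"}
        if body["prev_hash"] != prev_hash or _hash_body(body) != record["record_hash"]:
            return False
        prev_hash = record["record_hash"]
    return True


chain = []
append_record(chain, b"Negative result: treatment X had no effect.", "alice", "2023-01-10T09:00:00Z")
append_record(chain, b"Follow-up with a larger cohort.", "bob", "2023-02-01T09:00:00Z")
print(verify(chain))  # the untouched chain passes

chain[0]["timestamp"] = "2022-01-10T09:00:00Z"  # try to backdate the first entry
print(verify(chain))  # the tampering is detected
```

In an actual DeSci platform the chain would be replicated across many independent nodes, which is what stops a single party from simply rewriting all the hashes.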

Global academic communities can coordinate through Decentralized Autonomous Organizations (DAOs) to review, update, or cite manuscripts, earning publication, review, and citation tokens as credit for the work. In addition to these publication-specific digital tokens, the tokenization of other educational achievements, teaching performance, intellectual property, etc., would offer a more holistic record of a scientist’s contributions than the current citation-focused h-index.

Final thoughts

The future of blockchain-based decentralized science is near. Although it may still be in its nascent stage, I’m optimistic and excited about the advantages that DeSci approaches will bring to our scientific community, contributing to a more open, transparent, equitable, inclusive, and accessible future for science.

Et cetera

If you want to learn more about the DeSci approach to publishing, below are some resources that I’ve referenced.

Read:

- Tokenized Thought by Ben Hills <– highly recommended!!

- Schnapp E, Pulverer B. Publishing unpredictable research outcomes. EMBO Rep. 2022;23(9):e55821. doi:10.15252/embr.202255821

- Baer M, Groth A, Lund AH, Sonne-Hansen K. Creativity as an antidote to research becoming too predictable. EMBO J. 2023;42(4):e112835.

- Chu JSG, Evans JA. Slowed canonical progress in large fields of science. Proc Natl Acad Sci U S A. 2021;118(41):e2021636118.


queerioushazel

Queer | Vegan | Scientist studying aging | Exploring writing | Curious about everything
